I have been a Research Fellow (academic rank in Italy: RTD-A) at the Department of Computer Science of the University of Pisa since January 2023, where I am a member of the A³ Lab.

My research interests include compact data structures, data compression, and algorithm engineering, with a focus on so-called learned data structures, that is, data structures that use machine learning tools to uncover new regularities in the input data and thereby achieve significantly better space-time trade-offs than traditional ones.
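The basic idea of a learned data structure can be illustrated with a toy learned index. This is a minimal sketch under simplifying assumptions, not the PGM-index or any other library listed on this page. It fits a single line through the first and last (key, position) pairs of a sorted array, records the maximum prediction error ε over the stored keys, and answers a lookup with a binary search restricted to the at most 2ε+1 positions around the predicted one. The class name `LinearLearnedIndex` and its methods are hypothetical.

```python
from bisect import bisect_left


class LinearLearnedIndex:
    """Toy learned index (hypothetical): one linear model plus an error bound eps."""

    def __init__(self, keys):
        if not keys:
            raise ValueError("this sketch assumes a non-empty list of keys")
        self.keys = sorted(keys)
        n = len(self.keys)
        self.first, last = self.keys[0], self.keys[-1]
        # Model: the line through (first key, position 0) and (last key, position n-1).
        self.slope = (n - 1) / (last - self.first) if last != self.first else 0.0
        # eps = maximum distance between the predicted position and the position
        # of the first occurrence of each stored key.
        self.eps = 0
        for i, k in enumerate(self.keys):
            if i == 0 or self.keys[i - 1] != k:
                self.eps = max(self.eps, abs(self._predict(k) - i))

    def _predict(self, key):
        # Predicted position of key in the sorted array.
        return round((key - self.first) * self.slope)

    def find(self, key):
        """Return the position of the first occurrence of key, or None if absent."""
        p = self._predict(key)
        # The true position of a stored key lies in [p - eps, p + eps].
        lo = max(0, p - self.eps)
        hi = min(len(self.keys), p + self.eps + 1)
        if lo >= hi:
            return None
        i = bisect_left(self.keys, key, lo, hi)  # search only inside the window
        if i < hi and self.keys[i] == key:
            return i
        return None
```

For example, `LinearLearnedIndex([2, 3, 5, 8, 13, 21, 34, 55, 89, 144]).find(21)` returns `5`, and `find(4)` returns `None`. A single line only works well on nearly uniform keys. Learned indexes such as the PGM-index instead use several linear pieces, each guaranteeing a maximum error ε, so that the searched window stays small on real data.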

I obtained my PhD from the University of Pisa in February 2022 with a thesis on learning-based compressed data structures, which received the Best PhD thesis in Theoretical Computer Science award from the Italian Chapter of the EATCS. Before my current position, I was a postdoc (2022) and a PhD student (2018–21) at the University of Pisa, and a visiting researcher at Harvard University (2020).

Results of my research, including my software libraries, have found applications in database systems, information retrieval systems, and bioinformatics tools. Furthermore, I was granted an Italian patent (owned by the University of Pisa).

My research is supported by the EU-funded projects SoBigData.it and SoBigData++. In the past, I was supported by the Multicriteria data structures project funded by the Italian Ministry of University and Research.

Publications

Talks

Service

Program committees:

WSDM 2024, AIME 2024, BIBM 2024, WSDM 2023, BIBM 2023

Organising committees:

SPIRE 2023

Journal reviewer:

IEEE Trans. Knowl. Data Eng., IEEE Trans. Cloud Comput., IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst., VLDB J., J. Inf. Secur. Appl., Software: Pract. Exp., Neurocomputing, PLOS ONE

Conference reviewer:

SIGIR 2024, SPIRE 2023, SAND 2023, ALENEX 2022, DCC 2022, LATIN 2022

Teaching & Supervision

Teacher:

- Information Retrieval (3/6 ECTS). MSc in Computer Science, University of Pisa. AY 2023/24.

- Programmazione e Algoritmica (Programming and Algorithmics) (3/15 ECTS). BSc in Computer Science, University of Pisa. AY 2023/24.

Teaching assistant:

- Algorithm Engineering. MSc in Computer Science, University of Pisa. AYs 2022/23, 2021/22, 2020/21.

- Algoritmica e Laboratorio (Algorithmics and Laboratory). BSc in Computer Science, University of Pisa. AY 2018/19.

Co-supervised theses:

- Marco Costa, Engineering data structures for the approximate range emptiness problem, MSc in Computer Science - ICT, 2022.

- Mariagiovanna Rotundo, Compressed string dictionaries via Rear coding and succinct Patricia Tries, MSc in Computer Science - ICT, 2022.

- Antonio Boffa, Spreading the learned approach to succinct data structures, MSc in Computer Science - ICT, 2020.

- Alessio Russo, Learned index per i DB del futuro (Learned indexes for the databases of the future), BSc in Computer Science, 2020.

- Lorenzo De Santis, On non-linear approaches for piecewise geometric model, MSc in Computer Science - AI, 2019.

Software

LeMonHash

A monotone minimal perfect hash function that learns and leverages data smoothness.

LZ_ε

Compressed rank/select dictionary based on Lempel-Ziv and LA-vector compression.

LZ-End

Implementation of two LZ-End parsing algorithms.

PrefixPGM

Proof-of-concept extension of the PGM-index to support fixed-length strings.

RearCodedArray

Compressed string dictionary based on rear-coding.
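Rear coding stores a sorted list of strings as differences between consecutive strings: for each string, it records how many trailing characters to drop from the previous string and which suffix to append. The sketch below illustrates only this encoding step, with hypothetical function names `rear_encode` and `rear_decode`; it does not reproduce how RearCodedArray actually lays out or compresses its data.

```python
def lcp_length(a, b):
    """Length of the longest common prefix of strings a and b."""
    n = min(len(a), len(b))
    i = 0
    while i < n and a[i] == b[i]:
        i += 1
    return i


def rear_encode(sorted_strings):
    """Encode sorted strings as (drop, suffix) pairs (hypothetical helper).

    drop   = number of trailing characters removed from the previous string
    suffix = characters appended to obtain the current string
    """
    encoded = []
    prev = ""
    for s in sorted_strings:
        lcp = lcp_length(prev, s)
        encoded.append((len(prev) - lcp, s[lcp:]))
        prev = s
    return encoded


def rear_decode(encoded):
    """Rebuild all strings by scanning the pairs from the first one."""
    strings = []
    prev = ""
    for drop, suffix in encoded:
        # Slice with len(prev) - drop so that drop == 0 keeps the whole string.
        cur = prev[: len(prev) - drop] + suffix
        strings.append(cur)
        prev = cur
    return strings
```

For instance, `rear_encode(["compress", "compressed", "compression", "computer"])` returns `[(0, 'compress'), (0, 'ed'), (2, 'ion'), (7, 'uter')]`. Since decoding a string requires the previous ones, practical string dictionaries typically restart the encoding at the beginning of fixed-size buckets so that a lookup only scans one bucket.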

Block-ε tree

Compressed rank/select dictionary exploiting approximate linearity and repetitiveness.

LA-vector

Compressed bitvector/container supporting efficient random access and rank queries.

PyGM

Python library of sorted containers with state-of-the-art query performance and compressed memory usage.

PGM-index

Data structure enabling fast searches in arrays of billions of items using orders of magnitude less space than traditional indexes.

CSS-tree

C++11 implementation of the Cache-Sensitive Search tree.

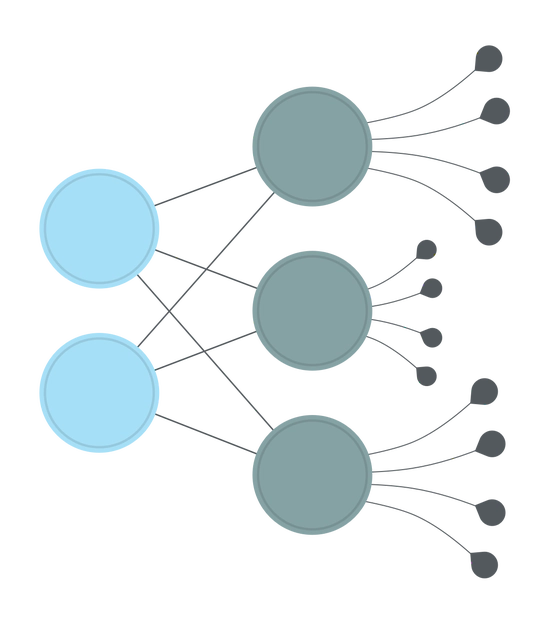

NN Weaver

Python library to build and train feedforward neural networks, with hyperparameter tuning capabilities.

Knowledge is like a sphere; the greater its volume, the larger its contact with the unknown.

― Blaise Pascal

Contact

- giorgio.vinciguerra@unipi.it

Room 324

Dipartimento di Informatica

Largo Bruno Pontecorvo 3

Pisa, 56127

Italy