I have been a Research Fellow (Italian academic rank: RTD-A) at the Department of Computer Science of the University of Pisa since January 2023.

My research interests include compact data structures, data compression, and algorithm engineering. I focus on learned data structures, that is, data structures that use machine learning tools to uncover new regularities in the input data and achieve significantly better space-time trade-offs than traditional ones.

I obtained my PhD from the University of Pisa in February 2022 with a thesis on Learning-based compressed data structures that was awarded the Best PhD thesis in Theoretical Computer Science by the Italian Chapter of the EATCS. Before my current position, I was a postdoc (2022) and PhD student (2018–21) at the University of Pisa. I was a visiting researcher at KTH Royal Institute of Technology (2024) and at Harvard University (2020).

In July 2025, I earned the Italian National Scientific Habilitation as Associate Professor in Computer Science.

The results of my research, including my software libraries, have found applications in database systems, information retrieval systems, and bioinformatics tools. I was also granted a US patent and an Italian patent, both owned by the University of Pisa.

My research is supported by the EU-funded project SoBigData.it. In the past, I was supported by the SoBigData++ and Multicriteria data structures projects.

Publications

Awards

- Best 2022 Italian PhD thesis in Theoretical Computer Science, awarded by the Italian Chapter of the EATCS

- WSDM 2025 Outstanding Reviewer Award, given to only 10 of 466 PC members

Service

Program committees:

VLDB 2026, SEA 2026, ECIR 2026, WSDM 2026, CIKM 2025, WSDM 2025, WSDM 2024, AIME 2024, BIBM 2024, WSDM 2023, BIBM 2023

Reproducibility committees:

SIGMOD 2025, SIGMOD 2024

Organising committees:

DSB 2025, SPIRE 2023

Journal reviewer:

IEEE Trans. Knowl. Data Eng., IEEE Trans. Cloud Comput., IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst., Inf. Syst., VLDB J., J. Inf. Secur. Appl., Software: Pract. Exp., Neurocomputing, PLOS ONE

Conference reviewer:

SODA 2026, SIGIR 2024, SPIRE 2023, SAND 2023, ALENEX 2022, DCC 2022, LATIN 2022

Talks

Teaching & Supervision

Teacher at the University of Pisa:

- Laboratorio 1 (3/12 ECTS). BSc in Computer Science. AYs 2025/26, 2024/25

- Programmazione e Algoritmica (3/15 ECTS). BSc in Computer Science. AYs 2024/25, 2023/24

- Information Retrieval (3/6 ECTS). MSc in Computer Science. AY 2023/24

Teaching assistant at the University of Pisa:

- Algorithm Engineering. MSc in Computer Science. AYs 2022/23, 2021/22, 2020/21

- Algoritmica e Laboratorio. BSc in Computer Science. AY 2018/19

Supervised and co-supervised students at the University of Pisa:

- MSc in Computer Science: Marco Costa (2022), Mariagiovanna Rotundo (2022), Antonio Boffa (2020), Lorenzo De Santis (2019)

- BSc in Computer Science: Giorgio Chelli (2025), Giuliano Gorgone (2025), Alessio Russo (2020)

Software

LearnedStaticFunction

A data structure to map a static set of keys to values, exploiting correlations to significantly reduce memory usage.

LeMonHash

A monotone minimal perfect hash function that learns and leverages the smoothness of the data.

LZ$\phantom{}_{\boldsymbol\varepsilon}$

Compressed rank/select dictionary based on Lempel-Ziv and LA-vector compression.

LZ-End

Implementation of two LZ-End parsing algorithms.

PrefixPGM

Proof-of-concept extension of the PGM-index to support fixed-length strings.

RearCodedArray

Compressed string dictionary based on rear-coding.

Block-$\boldsymbol\varepsilon$ tree

Compressed rank/select dictionary exploiting approximate linearity and repetitiveness.

LA-vector

Compressed bitvector/container supporting efficient random access and rank queries.

PyGM

Python library of sorted containers with state-of-the-art query performance and compressed memory usage.

PGM-index

Data structure enabling fast searches in arrays of billions of items using orders of magnitude less space than traditional indexes.

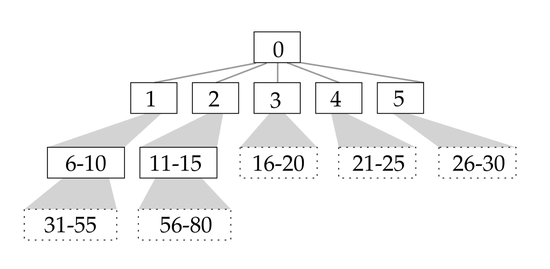

CSS-tree

C++11 implementation of the Cache Sensitive Search tree.

NN Weaver

Python library to build and train feedforward neural networks, with hyperparameter tuning capabilities.

Knowledge is like a sphere; the greater its volume, the larger its contact with the unknown.

― Blaise Pascal

Contact

- giorgio.vinciguerra@unipi.it

- Room 324, Dipartimento di Informatica, Largo Bruno Pontecorvo 3, Pisa, 56127, Italy