Teaching

"Teaching is the highest form of understanding." Aristotle

Thesis proposals

Interested students can ask me for a thesis proposal in the following main areas:

Data stream processing on heterogeneous systems (multi-core CPUs, GPUs and FPGAs)

Unconventional parallel programming approaches

Self-* adaptive supports for parallel processing (e.g., QoS- and energy-aware computing on IoT/Edge devices, resource allocation)

Operating systems (impact of scheduling, thread mapping/pinning on parallel programs)

Parallel programming, parallel libraries and runtime supports

Please send me an email, and we can talk in person or via Skype/Teams/Meet. Below is a list of currently available projects (descriptions in Italian); others are available upon request, also for international students.

[Master/Bachelor] Analysis and study of adaptive microbatching mechanisms in the context of data stream processing. Download proposal.

[Master] Support for cyclic dataflow constructs for data streaming in WindFlow. Download proposal.

[Master/Bachelor] Implementation of a timed-event system in a C++ data stream processing library (WindFlow). Download proposal.

[Master/Bachelor] Comparison of data stream processing tools on scale-up systems. Download proposal.

[Master/Bachelor] Performance comparison of data stream processing supports for applications with “larger-than-memory” state. Download proposal.

[Master] Design and implementation of a high-level tabular API for WindFlow. Download proposal.

[Master/Bachelor] Analysis of the energy consumption of data stream processing tools on Edge/IoT platforms. Download proposal.

[Master/Bachelor] Study of the integration of AI/ML inference systems into data stream processing tools. Download proposal.

[Master/Bachelor] Integration of Data Compression Techniques in Stateful Stream Processing at the Edge. Download proposal.

Teaching activities

I am currently teaching some courses in the Bachelor's Degree Programme in Computer Science (Laurea Triennale in Informatica) and the Master's Degree Programme in Computer Science (Laurea Magistrale in Informatica).

Accelerated Computing (0071A, 6 CFU)

Course Objectives

The Accelerated Computing (AC) course offers an in-depth exploration of heterogeneous parallel programming, a crucial area in modern computing that leverages diverse computing resources to achieve high performance and efficiency. Parallel computing is a prominent research theme in Computer Science and Engineering, focusing on the efficient exploitation of various processing devices, from Chip Multi-Processors (CMPs) and their composition in multi-CPU SMP/NUMA architectures to heterogeneous hardware solutions, including hardware accelerators such as GPUs and reconfigurable logic (e.g., FPGAs). Students will learn the principles and practices of heterogeneous parallel architectures and computing, focusing on both hardware and programming challenges and their interactions. Most of the teaching hours will be devoted to GPU programming, given the ability of GPUs to accelerate emerging workloads such as Big Data analytics and Machine Learning.

The course adopts a bottom-up approach, presenting different heterogeneous parallel programming models to students. The architectural features of GPU devices are detailed (SIMD, SIMT, independent thread scheduling), and the CUDA (Compute Unified Device Architecture) programming model is introduced as a fundamental low-level programming methodology for NVIDIA GPUs. Students will learn various programming techniques to leverage GPU hardware (e.g., tiling, minimizing thread divergence, dynamic parallelism), with concrete applications to real-world problems in fields such as linear algebra, graph algorithms, and machine learning. High-level programming approaches to GPUs are also covered, focusing on improving code productivity and time-to-solution. Along this line, the course will concentrate on pragma-based programming models such as OpenMP.
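As a rough illustration of the pragma-based style mentioned above, the following is a minimal sketch of a SAXPY loop offloaded with the OpenMP `target` construct. It is not taken from the course material: the function name `saxpy` and the pointer names `xp` and `yp` are chosen here only for illustration. If the compiler has no offload support, the loop simply runs on the host and yields the same result.

```cpp
#include <cstddef>
#include <vector>

// Illustrative sketch (hypothetical helper, not from the course material):
// computes y = a * x + y element-wise, assuming x and y have the same length.
void saxpy(float a, const std::vector<float>& x, std::vector<float>& y) {
    const std::size_t n = x.size();
    const float* xp = x.data();
    float* yp = y.data();

    // map(to:) copies x to the device before the loop;
    // map(tofrom:) copies y to the device and back to the host afterwards.
    // The combined construct distributes iterations over teams and threads.
    #pragma omp target teams distribute parallel for map(to: xp[0:n]) map(tofrom: yp[0:n])
    for (std::size_t i = 0; i < n; ++i) {
        yp[i] = a * xp[i] + yp[i];
    }
}
```

Calling `saxpy(2.0f, x, y)` on two equally sized vectors updates `y` in place. The single directive takes the place of the explicit kernel launch and memory copies that the same computation would need in CUDA, which is the productivity trade-off the high-level approaches aim for.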

Course Topics

Below is a list of the main topics studied in the course:

Introduction to the Course

Historical evolution of computing architectures

Flynn’s taxonomy

Heterogeneous computing platforms

Basics of Parallel Processing

Parallelism metrics and scalability laws

Stream parallelism and data parallelism

SIMD machines and GPUs

Array processors

SIMT and GPU architectures

General-purpose GPU computing

Programming GPU devices with CUDA

CUDA programming and execution model

CUDA memory hierarchy: global and shared memory

Host-device memory transfers

Optimizations (tiling, memory locality, access patterns and memory coalescing, minimizing thread divergence, dynamic parallelism)

Unified memory, pinned memory, zero-copy memory

CUDA streams, concurrency and CUDA events

Understanding the CUDA debugger and profiler

CUDA libraries

Concrete applications in different fields such as linear algebra and graph algorithms

Programming GPU devices with pragma-based approaches

Basic introduction to OpenMP

OpenMP for GPU programming (overview, tasks, target construct, data movement, hierarchical parallelism)

Microsoft Teams. Students should subscribe to the official Team of the course using their UNIPI credentials. Use the following code to join the Team: XXXXX. Teaching material (e.g., slides, references to the textbooks) is available in the resources of the Team.

Architetture degli Elaboratori e Sistemi Operativi (725AA, first part, 9 CFU)

Course Objectives

This is a year-long core course in the second year of the Bachelor's Degree Programme in Computer Science (Laurea Triennale in Informatica). The first part covers Computer Architecture (AE), while the second part concerns the design and operation of Operating Systems (SO). I am responsible for the first 9 CFU of the course, i.e., the AE syllabus, which corresponds to 72 hours of lectures (36 lectures) spread over the first semester and the beginning of the second semester.

Course Topics

The first part of the course (9 CFU), for which I am responsible, covers the following topics:

Fundamentals of computing systems (binary representation of integers and of fixed-point/floating-point numbers, Boolean logic, truth tables and simplification of logic expressions)

Combinational and sequential circuits

Hardware description languages (in particular Verilog for designing combinational and sequential circuits)

ARMv7 assembly language

Basic microarchitecture of the ARMv7 processor (single-cycle)

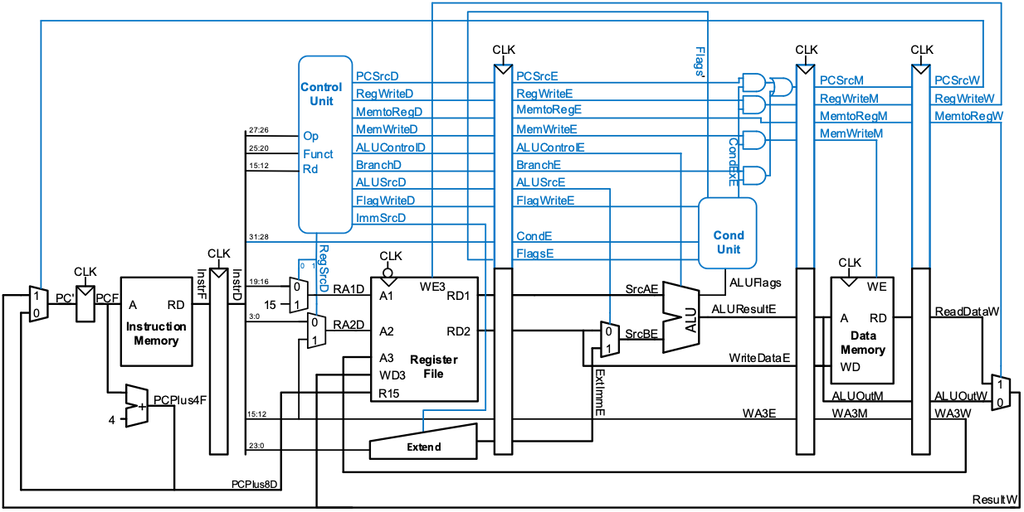

Advanced microarchitectures for ARMv7 (multi-cycle and pipelined)

Overview of advanced processors (superscalar, hardware multithreading)

Memory hierarchies (cache organization and operation, principles of locality and reuse, evaluation of the impact of the memory hierarchy on program execution)

Input/output (I/O) management, the concept of an I/O unit, memory-mapped I/O, overview of how drivers work

Microsoft Teams. The Team of the course can be joined with the following code (sign in with your UNIPI credentials): XXXXX. The Team also contains the teaching material, including references to the textbooks and supplementary material.