QuickStart

FogBrain currently supports service placement and migration decisions, both at first deployment and at application management time, focussing mainly on services that suffer from the latest changes in infrastructure conditions (e.g. the crash of a node hosting a service, or excessive latency between communicating services).

Application Placement Problem

FogBrain solves the application placement problem stated below, both at first deployment and at management time.

Let A be an application composed of interacting services S1, ..., Sh with their (hardware, software, IoT, communication) requirements and let I be a Cloud-IoT infrastructure composed of interconnected nodes N1, ..., Nk featuring certain (hardware, software, IoT, communication) capabilities.

An eligible placement for A over I maps each service Si of A to a certain node Nj of I so that all service requirements are satisfied by node and networking capabilities.

For more details on this problem, you can have a look at this research article.

Declare an Application

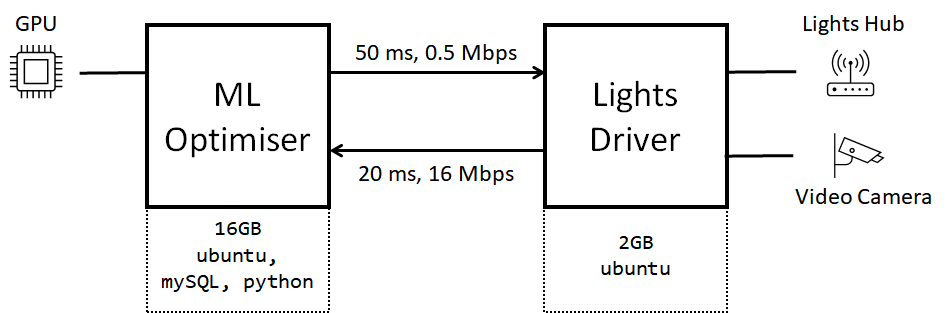

The application sketched in the figure relies on machine learning to optimise home interior lighting, based on data sensed by a video camera and by acting upon a smart lights hub. It is made of two interacting microservices: an ML Optimiser and a Lights Driver.

The ML Optimiser requires 16GB of RAM, the Ubuntu OS with mySQL and python, and the availability of a GPU at the target deployment node. The Lights Driver requires 2GB of RAM, the Ubuntu OS, and reachability of the video camera and of the lights hub from the target deployment node.

Besides, communication from the Lights Driver to the ML Optimiser tolerates a latency of at most 20 ms and requires the availability of at least 16 Mbps of bandwidth to livestream video footage. Similarly, communication from the ML Optimiser to the Lights Driver tolerates a latency of at most 50 ms and requires 0.5 Mbps of available bandwidth.

All such requirements can be declared in FogBrain as in:

% application(AppId, [ServiceIds]).

application(lightsApp, [mlOptimiser, lightsDriver]).

% service(ServiceId, [SoftwareRequirements], HardwareRequirements, IoTRequirements).

service(mlOptimiser, [mySQL, python, ubuntu], 16, [gpu]).

service(lightsDriver, [ubuntu], 2, [videocamera, lightshub]).

% s2s(ServiceId1, ServiceId2, MaxLatency, MinBandwidth)

s2s(mlOptimiser, lightsDriver, 50, 0.5).

s2s(lightsDriver, mlOptimiser, 20, 16).
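As a quick sanity check on the declaration above, the facts can be queried directly. The sketch below assumes a standard Prolog system such as SWI-Prolog; appHW/2 and sumHW/2 are hypothetical helpers introduced here for illustration only and are not part of FogBrain.

```prolog
:- use_module(library(lists)).

% Facts copied from the application declaration above.
application(lightsApp, [mlOptimiser, lightsDriver]).
service(mlOptimiser, [mySQL, python, ubuntu], 16, [gpu]).
service(lightsDriver, [ubuntu], 2, [videocamera, lightshub]).

% appHW(AppId, TotalHW): hypothetical helper that sums the RAM (in GB)
% required by all the services of an application.
appHW(App, TotalHW) :-
    application(App, Services),
    findall(HW, (member(S, Services), service(S, _, HW, _)), HWs),
    sumHW(HWs, TotalHW).

% sumHW(List, Sum): plain recursive sum of a list of numbers.
sumHW([], 0).
sumHW([H|T], Sum) :- sumHW(T, Rest), Sum is H + Rest.
```

Under these assumptions, the query appHW(lightsApp, Tot) binds Tot to 18, i.e. the 16 GB of the ML Optimiser plus the 2 GB of the Lights Driver.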

Declare an infrastructure

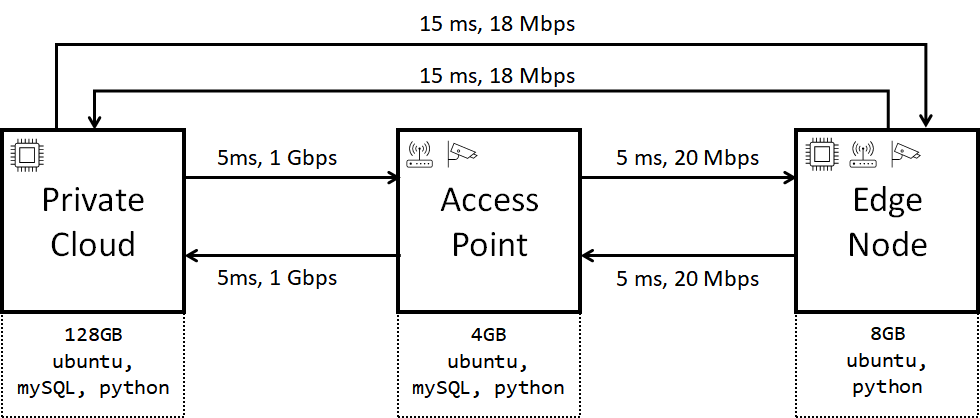

The infrastructure sketched in the figure spans a Cloud-IoT continuum made of three nodes: a private Cloud VM, a Wi-Fi access point and an edge computing node. GPUs are available at the private Cloud and at the edge node. The access point and the edge node can also reach a lights hub and a video camera, which can be used to deploy the previously described application. Each node features its own hardware and software capabilities and is connected to the others with the latencies and bandwidths reported on top of the end-to-end links.

All such capabilities can be declared in FogBrain as in:

% node(NodeId, SoftwareCapabilities, HardwareCapabilities, IoTCapabilities).

node(privateCloud,[ubuntu, mySQL, python], 128, [gpu]).

node(accesspoint,[ubuntu, mySQL, python], 4, [lightshub, videocamera]).

node(edgenode,[ubuntu, python], 8, [gpu, lightshub, videocamera]).

% link(NodeId1, NodeId2, FeaturedLatency, FeaturedBandwidth).

link(privateCloud, accesspoint, 5, 1000).

link(accesspoint, privateCloud, 5, 1000).

link(accesspoint, edgenode, 5, 20).

link(edgenode, accesspoint, 5, 20).

link(privateCloud, edgenode, 15, 18).

link(edgenode, privateCloud, 15, 18).
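To see how node capabilities relate to service requirements, the following sketch lists the nodes that could host each service when only software, hardware and IoT requirements are considered. It is a simplification written for this tutorial: candidate/2 and covers/2 are hypothetical helpers (not FogBrain predicates), and the check ignores link requirements as well as the safety thresholds that FogBrain applies.

```prolog
:- use_module(library(lists)).

% Facts copied from the declarations above.
service(mlOptimiser, [mySQL, python, ubuntu], 16, [gpu]).
service(lightsDriver, [ubuntu], 2, [videocamera, lightshub]).

node(privateCloud, [ubuntu, mySQL, python], 128, [gpu]).
node(accesspoint, [ubuntu, mySQL, python], 4, [lightshub, videocamera]).
node(edgenode, [ubuntu, python], 8, [gpu, lightshub, videocamera]).

% covers(Reqs, Caps): every requirement appears among the capabilities.
covers([], _).
covers([R|Rs], Caps) :- member(R, Caps), covers(Rs, Caps).

% candidate(ServiceId, NodeId): hypothetical helper, true when the node
% alone satisfies the hardware, software and IoT requirements of the service.
candidate(S, N) :-
    service(S, SWReqs, HWReqs, TReqs),
    node(N, SWCaps, HWCaps, TCaps),
    HWCaps >= HWReqs,
    covers(SWReqs, SWCaps),
    covers(TReqs, TCaps).
```

With these facts, findall(N, candidate(mlOptimiser, N), Ns) yields Ns = [privateCloud], since the other two nodes lack the required 16 GB of RAM, while findall(N, candidate(lightsDriver, N), Ns) yields Ns = [accesspoint, edgenode].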

Try FogBrain!

FogBrain actually runs in the boxes below. The first box contains editable information about the application and infrastructure sketched above; the second box contains a query to fogBrain/2, which can be used to determine a first placement for the whole application, or to determine migrations of the services that suffer from changes in infrastructure conditions.

First Placement

:- use_module(library(lists)).

:- use_module(library(statistics)).

:- dynamic(deployment/4).

hwTh(0.5). % hardware to be kept free on each node, so as to avoid overloading it

bwTh(0.2). % bandwidth to be kept free on each link, so as to avoid overloading it

% Management time: a deployment/4 fact exists, so only suffering services are re-placed.

fogBrain(App, NewPlacement) :-

deployment(App, Placement, AllocHW, AllocBW),

reasoningStep(App, Placement, AllocHW, AllocBW, NewPlacement).

% First deployment: no deployment/4 fact exists, so the whole application is placed.

fogBrain(App, Placement) :-

\+deployment(App,_,_,_),

placement(App, Placement).

placement(App, Placement) :-

application(App, Services),

placement(Services, [], AllocHW, [], AllocBW, [], Placement).

placement([], AllocHW, AllocHW, AllocBW, AllocBW, Placement, Placement).

placement([S|Ss], AllocHW, NewAllocHW, AllocBW, NewAllocBW, Placement, NewPlacement) :-

servicePlacement(S, AllocHW, TAllocHW, N),

flowOK(S, N, Placement, AllocBW, TAllocBW),

placement(Ss, TAllocHW, NewAllocHW, TAllocBW, NewAllocBW, [on(S,N)|Placement], NewPlacement).

servicePlacement(S, AllocHW, NewAllocHW, N) :-

service(S, SWReqs, HWReqs, TReqs),

node(N, SWCaps, HWCaps, TCaps),

hwTh(T), HWCaps >= T + HWReqs,

thingReqsOK(TReqs, TCaps),

swReqsOK(SWReqs, SWCaps),

hwReqsOK(HWReqs, HWCaps, N, AllocHW, NewAllocHW).

thingReqsOK(TReqs, TCaps) :- subset(TReqs, TCaps).

swReqsOK(SWReqs, SWCaps) :- subset(SWReqs, SWCaps).

subset([], _).

subset([X|Xs], Y) :-

member(X,Y),

subset(Xs,Y).

hwReqsOK(HWReqs, HWCaps, N, [], [(N,HWReqs)]) :-

hwTh(T), HWCaps >= HWReqs + T.

hwReqsOK(HWReqs, HWCaps, N, [(N,AllocHW)|L], [(N,NewAllocHW)|L]) :-

NewAllocHW is AllocHW + HWReqs, hwTh(T), HWCaps >= NewAllocHW + T.

hwReqsOK(HWReqs, HWCaps, N, [(N1,AllocHW)|L], [(N1,AllocHW)|NewL]) :-

N \== N1, hwReqsOK(HWReqs, HWCaps, N, L, NewL).

flowOK(S, N, P, AllocBW, NewAllocBW) :-

findall(n2n(N1,N2,ReqLat,ReqBW), interested(N1,N2,ReqLat,ReqBW,S,N,P), Ss),

serviceFlowOK(Ss, AllocBW, NewAllocBW).

interested(N, N2, ReqLat, ReqBW, S, N, P) :-

s2s(S, S2, ReqLat, ReqBW), member(on(S2,N2), P), N\==N2.

interested(N1, N, ReqLat, ReqBW, S, N, P) :-

s2s(S1, S, ReqLat, ReqBW), member(on(S1,N1), P), N\==N1.

serviceFlowOK([], AllocBW, AllocBW).

serviceFlowOK([n2n(N1,N2,ReqLat,ReqBW)|Ss], AllocBW, NewAllocBW) :-

link(N1, N2, FeatLat, FeatBW),

FeatLat =< ReqLat,

bwOK(N1, N2, ReqBW, FeatBW, AllocBW, TAllocBW),

serviceFlowOK(Ss, TAllocBW, NewAllocBW).

bwOK(N1, N2, ReqBW, FeatBW, [], [(N1,N2,ReqBW)]):-

bwTh(T), FeatBW >= ReqBW + T.

bwOK(N1, N2, ReqBW, FeatBW, [(N1,N2,AllocBW)|L], [(N1,N2,NewAllocBW)|L]):-

NewAllocBW is ReqBW + AllocBW, bwTh(T), FeatBW >= NewAllocBW + T.

bwOK(N1, N2, ReqBW, FeatBW, [(N3,N4,AllocBW)|L], [(N3,N4,AllocBW)|NewL]):-

\+ (N1 == N3, N2 == N4), bwOK(N1,N2,ReqBW,FeatBW,L,NewL).

reasoningStep(App, Placement, AllocHW, AllocBW, NewPlacement) :-

toMigrate(Placement, ServicesToMigrate),

replacement(App, ServicesToMigrate, Placement, AllocHW, AllocBW, NewPlacement).

toMigrate(Placement, ServicesToMigrate) :-

findall((S,N,HWReqs), onSufferingNode(S,N,HWReqs,Placement), ServiceDescr1),

findall((SD1,SD2), onSufferingLinks(SD1,SD2,Placement), ServiceDescr2),

merge(ServiceDescr2, ServiceDescr1, ServicesToMigrate).

onSufferingNode(S, N, HWReqs, Placement) :-

member(on(S,N), Placement),

service(S, SWReqs, HWReqs, TReqs),

nodeProblem(N, SWReqs, TReqs).

nodeProblem(N, SWReqs, TReqs) :-

node(N, SWCaps, HWCaps, TCaps),

hwTh(T), \+ (HWCaps > T, thingReqsOK(TReqs,TCaps), swReqsOK(SWReqs,SWCaps)).

nodeProblem(N, _, _) :-

\+ node(N, _, _, _).

onSufferingLinks((S1,N1,HWReqs1),(S2,N2,HWReqs2),Placement) :-

member(on(S1,N1), Placement), member(on(S2,N2), Placement), N1 \== N2,

s2s(S1, S2, ReqLat, _),

communicationProblem(N1, N2, ReqLat),

service(S1, _, HWReqs1, _),

service(S2, _, HWReqs2, _).

communicationProblem(N1, N2, ReqLat) :-

link(N1, N2, FeatLat, FeatBW),

(FeatLat > ReqLat; bwTh(T), FeatBW < T).

communicationProblem(N1,N2,_) :-

\+ link(N1, N2, _, _).

merge([], L, L).

merge([(D1,D2)|Ds], L, NewL) :- merge2(D1, L, L1), merge2(D2, L1, L2), merge(Ds, L2, NewL).

merge2(D, [], [D]).

merge2(D, [D|L], [D|L]).

merge2(D1, [D2|L], [D2|NewL]) :- D1 \== D2, merge2(D1, L, NewL).

replacement(_, [], Placement, _, _, Placement).

replacement(A, ServicesToMigrate, Placement, AllocHW, AllocBW, NewPlacement) :-

ServicesToMigrate \== [],

findall(S, member((S,_,_), ServicesToMigrate), Services),

partialPlacement(Placement, Services, PPlacement),

freeHWAllocation(AllocHW, PAllocHW, ServicesToMigrate),

freeBWAllocation(AllocBW, PAllocBW, ServicesToMigrate, Placement),

placement(Services, PAllocHW, NewAllocHW, PAllocBW, NewAllocBW, PPlacement, NewPlacement).

partialPlacement([],_,[]).

partialPlacement([on(S,_)|P],Services,PPlacement) :-

member(S,Services), partialPlacement(P,Services,PPlacement).

partialPlacement([on(S,N)|P],Services,[on(S,N)|PPlacement]) :-

\+member(S,Services), partialPlacement(P,Services,PPlacement).

freeHWAllocation([], [], _).

freeHWAllocation([(N,AllocatedHW)|L], NewL, ServicesToMigrate) :-

sumNodeHWToFree(N, ServicesToMigrate, HWToFree),

NewAllocatedHW is AllocatedHW - HWToFree,

freeHWAllocation(L, TempL, ServicesToMigrate),

assemble((N,NewAllocatedHW), TempL, NewL).

sumNodeHWToFree(_, [], 0).

sumNodeHWToFree(N, [(_,N,H)|STMs], Tot) :- sumNodeHWToFree(N, STMs, HH), Tot is H+HH.

sumNodeHWToFree(N, [(_,N1,_)|STMs], H) :- N \== N1, sumNodeHWToFree(N, STMs, H).

assemble((_,NewAllocatedHW), L, L) :- NewAllocatedHW=:=0.

assemble((N, NewAllocatedHW), L, [(N,NewAllocatedHW)|L]) :- NewAllocatedHW>0.

freeBWAllocation([],[],_,_).

freeBWAllocation([(N1,N2,AllocBW)|L], NewL, ServicesToMigrate, Placement) :-

findall(BW, toFree(N1,N2,BW,ServicesToMigrate,Placement), BWs),

sumList(BWs,V), NewAllocBW is AllocBW-V,

freeBWAllocation(L, TempL, ServicesToMigrate, Placement),

assemble2((N1,N2,NewAllocBW), TempL, NewL).

toFree(N1,N2,B,Services,_) :-

member((S1,N1,_),Services), s2s(S1,S2,_,B), member((S2,N2,_),Services).

toFree(N1,N2,B,Services,P) :-

member((S1,N1,_),Services), s2s(S1,S2,_,B), \+member((S2,N2,_),Services), member(on(S2,N2),P).

toFree(N1,N2,B,Services,P) :-

member(on(S1,N1),P), \+member((S1,N1,_),Services), s2s(S1,S2,_,B), member((S2,N2,_),Services).

sumList([],0).

sumList([B|Bs],V) :- sumList(Bs,TempV), V is B+TempV.

assemble2((_,_,AllocatedBW), L, L) :- AllocatedBW =:= 0.

assemble2((N1,N2,AllocatedBW), L, [(N1,N2,AllocatedBW)|L]) :- AllocatedBW>0.
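The link checks performed by serviceFlowOK/3 and bwOK/6 in the listing above can be isolated in a much smaller sketch, which makes the role of the bwTh/1 threshold easier to see. The helper linkOK/4 is hypothetical and not part of FogBrain; it assumes that no bandwidth has been allocated on the link yet.

```prolog
% Two links and the bandwidth threshold, copied from this tutorial.
link(accesspoint, privateCloud, 5, 1000).
link(edgenode, privateCloud, 15, 18).
bwTh(0.2). % bandwidth to be kept free on every link

% linkOK(NodeId1, NodeId2, MaxLatency, MinBandwidth): hypothetical helper.
% A flow fits a link if the link is fast enough and if, after serving the
% flow, at least the threshold amount of bandwidth remains free.
linkOK(N1, N2, ReqLat, ReqBW) :-
    link(N1, N2, FeatLat, FeatBW),
    FeatLat =< ReqLat,
    bwTh(T),
    FeatBW >= ReqBW + T.
```

For instance, linkOK(edgenode, privateCloud, 20, 16) succeeds, because 15 =< 20 and 18 >= 16 + 0.2. The query linkOK(edgenode, privateCloud, 20, 18) fails even though the link features exactly 18 Mbps, since the threshold would no longer be left free.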

To determine a first placement for lightsApp, you simply need to specify all application requirements and target infrastructure capabilities in the box below:

% application(AppId, [ServiceIds]).

application(lightsApp, [mlOptimiser, lightsDriver]).

% service(ServiceId, [SoftwareRequirements], HardwareRequirements, IoTRequirements).

service(mlOptimiser, [mySQL, python, ubuntu], 16, [gpu]).

service(lightsDriver, [ubuntu], 2, [videocamera, lightshub]).

% s2s(ServiceId1, ServiceId2, MaxLatency, MinBandwidth)

s2s(mlOptimiser, lightsDriver, 50, 0.5).

s2s(lightsDriver, mlOptimiser, 20, 16).

% node(NodeId, SoftwareCapabilities, HardwareCapabilities, IoTCapabilities).

node(privateCloud,[ubuntu, mySQL, python], 128, [gpu]).

node(accesspoint,[ubuntu, mySQL, python], 4, [lightshub, videocamera]).

node(edgenode,[ubuntu, python], 8, [gpu, lightshub, videocamera]).

% link(NodeId1, NodeId2, FeaturedLatency, FeaturedBandwidth).

link(privateCloud, accesspoint, 5, 1000).

link(accesspoint, privateCloud, 5, 1000).

link(accesspoint, edgenode, 5, 20).

link(edgenode, accesspoint, 5, 20).

link(privateCloud, edgenode, 15, 18).

link(edgenode, privateCloud, 15, 18).

Then issue a query to fogBrain/2 (delete and retype the final . of the query to re-evaluate it) to see the eligible first placements for lightsApp:

fogBrain(lightsApp,Placement).

By default, FogBrain asserts the first eligible placement it determines.

Migration with Continuous Reasoning

To trigger continuous reasoning, we assume that the application deployment below (the first one found) is currently running and has been asserted as a fact in the FogBrain knowledge base:

deployment(lightsApp, % AppId

[on(lightsDriver,accesspoint),on(mlOptimiser,privateCloud)], % Placement

[(privateCloud,16),(accesspoint,2)], % AllocHW

[(accesspoint,privateCloud,16),(privateCloud,accesspoint,0.5)]). % AllocBW

As shown above, FogBrain represents asserted deployments as deployment/4 facts, which contain the current Placement of application services and the hardware and bandwidth resources it requires on nodes and end-to-end links, viz. AllocHW and AllocBW.
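One possible way to keep such a fact up to date in a plain Prolog knowledge base is sketched below. This is only an illustration under our own assumptions: recordDeployment/4 is a hypothetical helper, and the way FogBrain itself stores deployments may differ.

```prolog
:- dynamic(deployment/4).

% recordDeployment(AppId, Placement, AllocHW, AllocBW): hypothetical helper
% that replaces any previously stored deployment of the same application,
% so that at most one deployment/4 fact per application exists.
recordDeployment(App, Placement, AllocHW, AllocBW) :-
    retractall(deployment(App, _, _, _)),
    assertz(deployment(App, Placement, AllocHW, AllocBW)).
```

Calling recordDeployment/4 with the four arguments of the fact shown above makes deployment(lightsApp, P, HW, BW) succeed exactly once, even if the call is repeated.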

Copy and paste the deployment/4 fact into the first box below. Then change the infrastructure so as to make the lightsDriver need migration, e.g. by removing lightshub from the list of IoT capabilities of the accesspoint:

% application(AppId, [ServiceIds]).

application(lightsApp, [mlOptimiser, lightsDriver]).

% service(ServiceId, [SoftwareRequirements], HardwareRequirements, IoTRequirements).

service(mlOptimiser, [mySQL, python, ubuntu], 16, [gpu]).

service(lightsDriver, [ubuntu], 2, [videocamera, lightshub]).

% s2s(ServiceId1, ServiceId2, MaxLatency, MinBandwidth)

s2s(mlOptimiser, lightsDriver, 50, 0.5).

s2s(lightsDriver, mlOptimiser, 20, 16).

% node(NodeId, SoftwareCapabilities, HardwareCapabilities, IoTCapabilities).

node(privateCloud,[ubuntu, mySQL, python], 128, [gpu]).

node(accesspoint,[ubuntu, mySQL, python], 4, [lightshub, videocamera]).

node(edgenode,[ubuntu, python], 8, [gpu, lightshub, videocamera]).

% link(NodeId1, NodeId2, FeaturedLatency, FeaturedBandwidth).

link(privateCloud, accesspoint, 5, 1000).

link(accesspoint, privateCloud, 5, 1000).

link(accesspoint, edgenode, 5, 20).

link(edgenode, accesspoint, 5, 20).

link(privateCloud, edgenode, 15, 18).

link(edgenode, privateCloud, 15, 18).

% copy & paste the deployment/4 for lightsApp here:

After you have changed the infrastructure, query the fogBrain/2 predicate again (delete and retype the final . of the query to re-evaluate it) to trigger continuous reasoning and determine a new placement only for the lightsDriver service (e.g. migrating it from the accesspoint to the edgenode):

fogBrain(lightsApp,Placement).
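To see why only the lightsDriver is selected for migration in this scenario, the node-related part of the check can be reduced to the simplified sketch below. It assumes that the lightshub has already been removed from the accesspoint; suffering/2 and covers/2 are hypothetical helpers written for this example, and they deliberately ignore hardware thresholds and link conditions, which FogBrain also considers.

```prolog
:- use_module(library(lists)).

service(mlOptimiser, [mySQL, python, ubuntu], 16, [gpu]).
service(lightsDriver, [ubuntu], 2, [videocamera, lightshub]).

% Infrastructure after the change: the accesspoint no longer reaches the lightshub.
node(privateCloud, [ubuntu, mySQL, python], 128, [gpu]).
node(accesspoint, [ubuntu, mySQL, python], 4, [videocamera]).
node(edgenode, [ubuntu, python], 8, [gpu, lightshub, videocamera]).

% covers(Reqs, Caps): every requirement appears among the capabilities.
covers([], _).
covers([R|Rs], Caps) :- member(R, Caps), covers(Rs, Caps).

% suffering(Placement, ServiceId): hypothetical, simplified check. A service
% suffers if its node is gone or no longer offers the software and IoT
% capabilities the service requires.
suffering(Placement, S) :-
    member(on(S, N), Placement),
    service(S, SWReqs, _, TReqs),
    \+ ( node(N, SWCaps, _, TCaps),
         covers(SWReqs, SWCaps),
         covers(TReqs, TCaps) ).
```

With the running placement [on(lightsDriver,accesspoint), on(mlOptimiser,privateCloud)], findall(S, suffering(Placement, S), Ss) yields Ss = [lightsDriver], so the mlOptimiser can stay on the privateCloud.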

After playing with this QuickStart tutorial, you can download FogBrain from our GitHub repository and browse its online docs here.

About This Site

This site is live and interactive, powered by the klipse plugin:

- Live: The code is executed in your browser

- Interactive: You can modify the code and it is evaluated as you type

© 2020 Service-Oriented Cloud & Fog Computing Research Group, Department of Computer Science, University of Pisa, Italy